Generative AI: The Next Decade

Some New Year's 2026 Predictions

I’ve continued to work with generative AI and to read a fair amount of the busy stream of writing about it. I feel ready to make some predictions about generative AI’s near-term implications.

None of what I’m seeing—and fearing—is inevitable. But I’m afraid that the dependent variable here is either the AI industry deciding to narrow and tailor its development and deployment of generative AI or some serious effort by workplaces and governments to restrict or reject that deployment. Neither seems likely at the moment.

In workplaces, one major use of generative AI is not at all revolutionary. It’s a new label for an older kind of thing, which is the use of “algorithmic black boxes” as a substitute for direct human evaluation of information flowing into and out of an organization. Generative AI just extends the deployments of such “black boxes” and makes the interface for interacting with them more accessible to technologically inexpert middle managers. This use will continue because it wasn’t about effectiveness in analyzing information or making decisions in the first place. Nor was it really about achieving greater efficiency via the elimination of lower-level white-collar jobs, though algorithmic tools have offered an ideological justification for firing people since the early 2010s at least. The real appeal of black boxes, whether maintained by generative AI or otherwise, is as a liability shield. Human resources leaders and other middle managers love to translate this into “minimizing bias” because they know that is a sociopolitical concept that inhibits criticism, but fundamentally it’s about protecting the asset wealth of an organization from lawsuits alleging discrimination. “The AI did it” is going to have enduring appeal as a shelter from agency.

As an extension of this logic, generative AI is going to continue to expand into every level of customer service interactions in every industry. It doesn’t matter if a drunken gerbil allowed to run on a keyboard could likely generate better responses to most customer inquiries and complaints, because the goal is not better customer service. Again, we’re already used to the first layer of interaction with any company or business being frustratingly pointless, and have been since the first decade of the 2000s. Your first query goes to the FAQ, the second goes to an offshored person who knows nothing about your problem and just reads the FAQ to you. Only with a lot of patient persistence do you eventually get to someone who can help. The goal is to make you go away and solve your own problem, and to relieve the company of having to hire more than a handful of expensive people to staff a call center that can actually solve problems. If every large company treats you equally badly in this way, then you can’t switch to a company that does it better: it’s a kind of convergence on monopoly behavior without the need for active collusion. Generative AI is a new way to accomplish this old goal.

Looping generative AI into customer service inquiries will also protect businesses from liability. It won’t be the human personnel of a service business that didn’t put toilet paper in your hotel room, that gave you the wrong model of rental car, or that charged you for twenty burgers instead of one when you ordered from DoorDash; it will be the AI. And since the AI will be the only layer of service interaction you can reach remotely, it won’t be able to acknowledge responsibility or error, because its system prompts will prevent that, and there will be no recoverable memory or transcript of its errors. In some cases, you’ll have to sue to get redress, because even the credit card company will be using a generative AI that won’t facilitate a chargeback. Which means you won’t get redress at all, which might mean that people will become increasingly reluctant to interact with conventional service industries unless they absolutely have to.

The financial incentives for cultural work in the long tail, by independent producers, will contract violently. The people writing self-published novels, making clever short videos, making visual art, making a clever indie game, will find that if they happen to make something that other people like and want, it will be almost immediately reproduced by slop plagiarists who are legally untouchable. This is less a prediction and more a description. We are already there, it’s just that many people who have creative talents and abilities are motivated to continue producing work despite the massive noise overwhelming any signal they emit. This will also affect social media, most particularly Reddit. High-value contributors will increasingly peel off and give up because they will mostly be talking to generative AI bots and anything interesting and potentially profitable they create will be reproduced almost directly and immediately by generative AI elsewhere.

The slowing of new text-making, new art-making, in social media and public culture, will have a serious impact on machine learning intended to improve future iterations of generative AI. Generative AIs will increasingly be stuck in a reference frame of 2022 or so and have difficulty simulating natural-language responses that reference events, slang or other mutable aspects of social and cultural life that come after 2022. AI companies will increasingly turn to micropayments of the kind associated with Amazon’s Mechanical Turk service in order to get human beings to write or create new material to train on, and will become increasingly desperate about digitizing everything and anything that has not been digitized. They will also increasingly push to have all spaces where human beings are conversing recorded and turned into transcriptions that can be used to train generative AI. Opting out of being recorded will increasingly be a privilege reserved for people at the top of workplace hierarchies, or yet another reason to remain in one’s home and never to spend time socializing in public spaces. (Though homes also will have always-on recording of various kinds.)

At the profit-seeking industrial end of culture-making, art and culture are going to diverge into two streams. One will be technically proficient slop, and that will be the majority of what is available as streaming media, as text for reading, and as commercial art. This is not all that different from the pre-generative AI situation: cop shows, hospital dramas, Hallmark movies, romance novels, and so on are already highly formulaic, interchangeable, recycled. Having generative AI create this material may not even produce much savings, since human workers will still have to clean up the slop a bit before pushing it out. The other stream will be highly bespoke culture made by human creators. It will be more and more expensive to rent, and increasingly renting, through a time-delineated license to view, will be the only way to access it. Collecting bespoke culture as an owner will be strictly for the extremely wealthy.

Agentive AIs will increasingly alter source texts and digital data when they are accused of error, so that the source texts conform to the initial AI interpretation of them and absolve the AIs of the charge of “hallucinating”. Textual originals and data will be increasingly difficult to consult: archives that aren’t positioned to monetize what they hold may dispose of them for reasons of economy, while archives that are positioned to monetize will make them expensive to examine. Correcting a generative AI misinterpretation of original text or data will become surpassingly difficult, because generative AI will be the first responder to any claim of error and will dismiss most such claims when, on look-up, it finds the text that another agentive AI has already altered.

Video imagery of distant events will become effectively worthless. Nations that now maintain strong controls over information coming in or out through digital networks will increasingly commission deepfaked media designed to swamp or de-authenticate cellphone pictures and videos that document governmental oppression, while also issuing custom system prompts to generative AI designed to sabotage inquiries that the government wants to suppress. Governments that want to maintain the free circulation of accurate information and democratic conversation will be forced to isolate their own commons from worldwide networks in order to choke off rampant disinformation and fraud.

People will have to start prizing direct eyewitness experience more. We will start to underwrite “lecture circuits” of eyewitnesses to events who will testify to what they’ve seen and done to local audiences—essentially the equivalent of shifting testing and examination to blue-books with no online access.

Generative AI will write most code, which will create another version of the “stuck in 2022” problem—code for new hardware, code for new problems, code with novel problem-solving strategies, will become an extremely bespoke activity carried out by an increasingly small workforce with the necessary skills.

Students receiving expensive, selective education at all levels will have high value in labor markets only if their institutions figure out how to convince incoming students to learn basic skills and acquire foundational knowledge without using generative AI, so that they can reach the point where they have high-end bespoke training and the ability to use generative AI properly in specialized ways. There won’t be any way to reach that level of quality through force and punishment alone—students will have to want that kind of training and accept some of the initial tedium it involves. Education awash in AI slop will leave most people locked out of any labor market that doesn’t turn on physical, embodied trade-school knowledge and work.

Even if the companies making generative AI eventually collapse (which I think they might within five to ten years), it will be extremely difficult to repair some of the damage that the careless deployment of generative AI is going to do. When the dust settles, it may be that the offspring of generative AI, retooled (again) exclusively as expert systems, will serve even more productively some of the specialized functions that AI boosters excitedly predict for them: the automated analysis of extremely large flows of data, the enhancement of specialized expert analysis of difficult problems, the linking up of high-level problems in two expert domains where there isn’t an existing tradition of collaboration between knowledge producers, and so on. That activity is going to go on throughout the next decade, but it’s not enough to justify the extravagant infrastructural investments that the current AI industry is carrying out, or to produce the profit margins those investments imply.

Image credit: By AI generated, presumably by Billy Coull - https://willyschocolateexperience.com, archived, Public Domain, https://en.wikipedia.org/w/index.php?curid=76274888

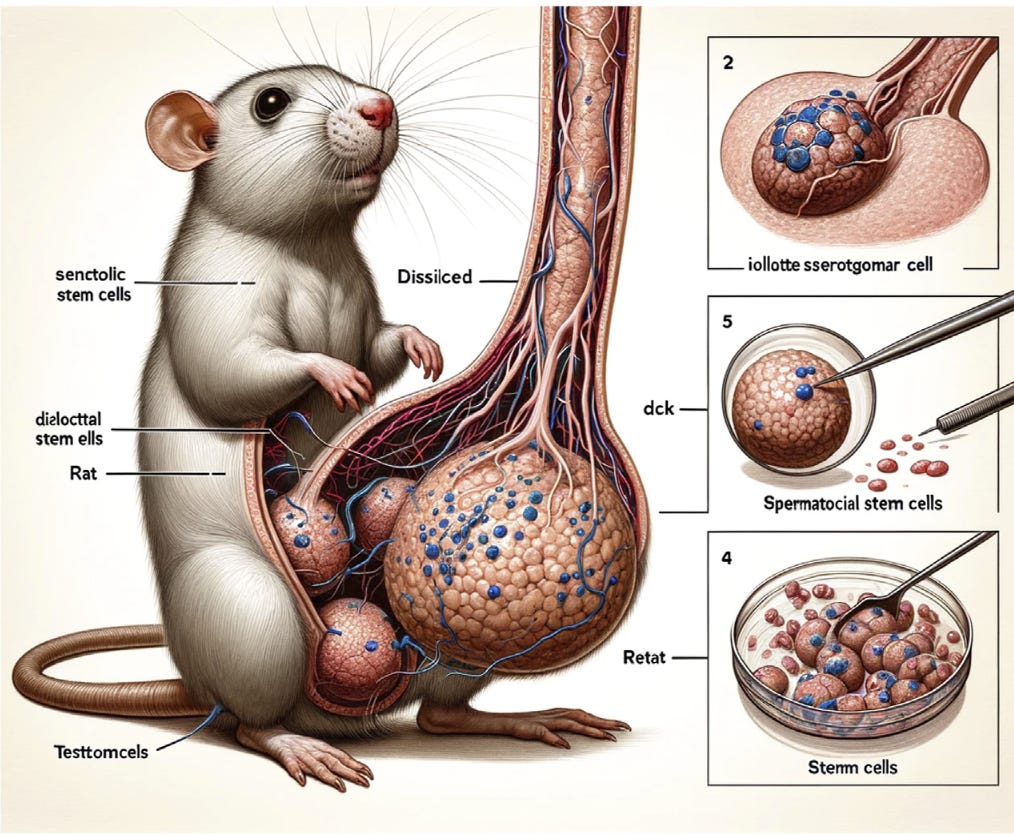

Image credit: By Midjourney AI; prompted by Xinyu Guo and/or Dingjun Hao - DOI: 10.3389/fcell.2023.1339390, Public Domain, https://commons.wikimedia.org/w/index.php?curid=145404581
