I wonder sometimes if people who do project management, or just management generally, are aware of Fred Brooks’ 1975 book The Mythical Man-Month. I’m not even sure that people in programming work know it any longer, though I think the core maxims of the book are still in circulation.

Brooks’ take on productivity was meant to be used in practical ways to improve project management. The essential insight was that adding more people to a project that was late didn’t speed it up. It slowed it down even more because of the labor required to integrate new people into the project workflow. In later revisions, he expanded some of these insights. Working on a second iteration of a project generally tempts people to add everything they took out the first time, which then makes that project expensive and slow. More importantly, he argued that one-simple-trick productivity shortcuts never work, that at some point a project takes as long as it takes. He also added something a bit like an auteur theory of project management, arguing that you need one person or a really small team of people who have a clear idea of what the project is, what it’s intended to do, what needs it is fulfilling, and that this project designer needs to ruthlessly cut out contributions from people working on the project that don’t fit the idea, even if they are good contributions in and of themselves.

In the domains that Brooks meant to address, I think his insights remain as practical as ever. I see it all the time in various post-facto analyses of computer games, where projects went wrong because of feature bloat or too many developers pulling in different directions or the corporate management deciding to rush a game to market via adding people to the team and forcing crunch-time work, leading to the release of something that might have been an enduring classic but ends up being a forgettable failure.

I think you can use the book as a starting place for a much more expansive suspicion about managerialism, efficiency and austerity culture, however, even if that was not Brooks’ intention. We are at a moment where that perspective is profoundly necessary.

I am old enough that my productive life began before personal computers were widely available. I’m grateful to my mother for making me take a typing class in the summer during high school: it’s been a core skill that I’ve relied upon in almost every job I’ve had. I wrote my essays in college on a typewriter, using Liquid Paper, until my senior year when the computer science department (reluctantly) supported a set of networked terminals with word processors for the use of seniors writing theses. (There were terminals downstairs that you could use if you wanted to play Adventure as long as they weren’t needed by students doing programming work.) The year after I graduated, I had my first personal computer, a Macintosh, though my parents had bought one at home the year prior to that.

For many years, I was generally an early adopter of computer-based tools and processes, most particularly the use of networked computers for communication. I made avid use of email from a very early point in its history and participated in asynchronous discussions on early dial-up bulletin boards and Usenet. I didn’t know how to program, but I knew enough about computers as a user that I could enhance my value in working environments just by reading the fucking manual and making complex machines with bad interfaces work for other people. I worked in grad school as a temp and was in demand just because I could figure the tech out. At one office, they were doing market research with Scan-Tron forms and they’d bought an automated reader that was supposed to dump the scan results into Microsoft Excel (which was then a very new piece of software that their corporation had bought, replacing Lotus 1-2-3, which is what the older people in the office knew best). No one used it; they entered the data by hand. When I just casually started using it after spending an hour getting it set up, they were electrified: how was that possible? Let’s keep that guy! Pay him more!

When I arrived at Swarthmore as an assistant professor, my predecessor had what was then a very antiquated Macintosh in his office, which he informed me he’d “never used”. I managed to get a newer machine installed not long afterwards, and folks in my department quickly understood that I and another colleague who arrived at the same time were the tech users who would constantly press to have access to new tools and better instructional tech infrastructure.

The early adopters got a kind of heady, alluring high off of a new device, a new application, a new technology. If you had a lot of comfort and facility with email in the 1980s through to the mid-1990s, you were communicating at warp speed while everyone else was just poking along through print communications. We had inter-departmental envelopes that passed around memos, letters, information one person at a time, one day at a time, while the emailers blasted through all the information, asked their questions, got their answers, made their point, in an hour or two. Because not everyone was on email, and those who were didn’t privilege it, the signal-to-noise ratio was very high. It left you time in the day to use email for enriching discussions. This was the golden age of listservs, but it was also when you could use email as a substitute for a face-to-face meeting because if you had a discussion partner who was also a heavy email user and a fast typist, you could focus on a back-and-forth without multitasking or having to divide your attention.

Asynchronous threaded messaging was for me even more generative at that time. You could really sustain some great discussions with a fairly large group of people involved, and the format solved the problem of individuals speaking over one another—messages could go out simultaneously, there were fast streams of talk and slower ones. At its best, there was a kind of jazz to it all, an alluring dialogic rhythm. A lot could get done, a lot could be thought and said, information could be compiled at what felt like an impossible rate. In a diverse enough group in terms of life experiences and knowledge sets, you could get almost any question answered without the agonizing work of picking through reference books over in the physical library. I used to sort of laugh inside when I took students on a library tour and the librarians showed them all the bibliographies and encyclopedias and specialized reference, because I was discovering that a lot of that information I could get in targeted ways simply by being in the right online place at the right online time.

It took a long time for me to see the pattern that is now in hindsight clear to almost everybody my age, and to younger generations as well. As the advantages gained by the early adopters became clear, more people pushed voluntarily into those spaces. And then as the productivity increases jolted down the line, everybody else was pushed involuntarily. The advantage suddenly vanished. The noise was up, the signal was down. Everything was suddenly being done in email, and suddenly the speed of email dramatically increased the amount of information and communication you were expected to produce and conduct. You were suddenly answering questions from all over all day long. We were all working more and it stopped being magic.

If you’re as old as I am, you’ve now seen this cycle repeated multiple times. We are all in some sense the extra workers being added to a long-delayed project with the expectation that we will make it go faster and instead it gets slower and slower all the time. We are all bugs on the windshield in the race to get to full frictionless efficiency, splattering over and over again as that fictional, inhuman El Dorado shimmers in the distance, calling to those who dream of a world where they no longer have to hire human beings at all but who in the meantime are happy to make the people they pay do more and more work while pretending that in fact they are making it easier for us all.

In academia, this drive to nowhere takes on familiar shapes across campuses. We imitate one another, which provides a ready answer any time you ask “Why are we adopting this new system, this new process?” Answer: because it has become an industry-wide standard! Why did the first adopters do it, then? As well ask which came first, the chicken or the egg.

We never do an audit in advance of adopting a new platform, system or app of the anticipated changes to net workloads. If someone has hard empirical evidence that the new product is actually going to reduce everybody’s work, that’s strictly private, unshared or wholly proprietary knowledge. Otherwise, the assurances of savings are assumptive, futureward, imagined. There’s a crass reason for this: the people buying the product, hiring the vendor, bringing in the consultancy, usually have a real person’s real job in mind as the immediate savings, as in “eliminating that job”. The more humane institutions are often content to wait for retirement or for someone to move on to add the savings; others are looking to re-assign or fire someone more immediately. But the deeper reasoning is more like religious faith. The new product is a kind of austerity eucharist, converting to the true body of efficiency as it passes into the institution’s digestive system.

In practice what happens is that the job formerly done by a person gets divided into a thousand tiny jobs and distributed to the entire workforce. In this act, it does not magically become less work. The sum remains the same. The hope is just that each person will be able to add it to their workflow and barely notice because it is just that one…tiny…wafer. The problem is that we are all the character from Monty Python’s The Meaning of Life, being fed a mountain of tiny wafers until we are engorged to the breaking point.

And in the end it turns out that the old job has not merely been transformed from 100 to 1+1+1+1+1…=100. Almost invariably, the new process now requires hiring someone else to make it work. The institution will desperately try to weave and dodge to contain the damage, arguing that these new hires are temporary. (Usually justifying paying them less, but not always.) It’s just until we’ve got the kinks out of the system.

But if the system was a kludge to begin with, then in fact the number of people needed to keep it working at all with patches and migration plans and upgrades and new kinds of interoperability multiplies. It becomes a permanent priesthood, tending to the idol in its temple.

If the system that was purchased was a beautifully built marvel of software design, its vendor is almost certainly canny enough to recognize that they have the purchasing institution over a barrel. The institution had a sore heel and decided to use some software opiates to make the pain go away, only to find that the price of the drug increases by 100% a year. There’s no way back now: the person who did that job before in a human way is gone, the knowledge they once had passes beyond the veil. Again there’s no savings on labor: you’re just paying some other institution’s employees now. Plus, even if the system works as advertised, you’re likely to discover that you’ve just moved your bottlenecks somewhere else. Did you buy a great system for handling expense reimbursements and then make all your employees file their own? Ok, so maybe you don’t need the people in the office to sit there looking at requests for reimbursement. But you’d better have someone upstream looking at the expenses passing through the system unless you’ve decided that losing money through embezzlement and fraud is still less than paying human beings to receive those reimbursement requests. You’re up the ChromeRiver without a paddle.

Otherwise, what happens is you spend more and offload more work on fewer people. Which is more or less what the big AI investors are now trying to promise as the use-case of their product. Fire more people, increase the workload for the people remaining. Number go up.

The AI is not going to be doing those jobs itself. It’s going to need an army of people caring for it, directing it, fixing it, like a monster toddler set loose in a nightmare day care center. There will be employees who clean the AI’s poopy diapers on output, who feed it the informational gruel in a form it can digest, who see to its health, who entertain it and train it. And then there will be a new job for the person who is designated to convince you that you’re seeing a pinstriped executive doing a high-skill job, not a pooping, meandering toddler.

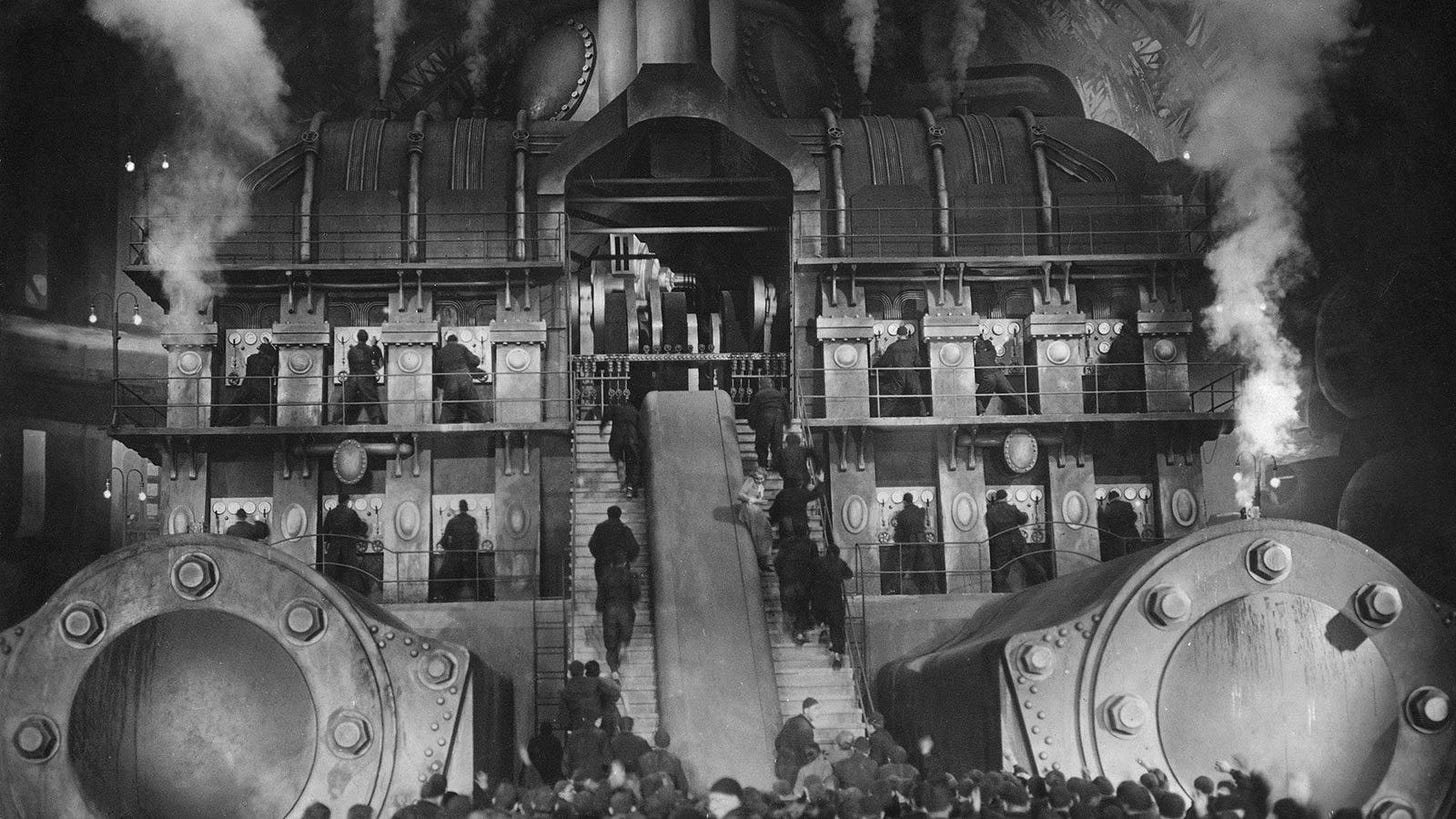

You might say, “Well, if the net impact is that more people are hired, what’s your complaint?” That’s what the proponents of chasing the new tech often promise, after all: sure, some jobs will go away, others will come in. The problem is that the net labor was distributed to all the existing workers without anybody acknowledging that. The technology is treated as if it made the work just disappear. So if you add more people later to keep the fiction of that disappearance alive, you’re not adding useful capacity. You’re just making the apparatus more convoluted than ever. There’s all sorts of growth possible that could extend the institution’s core mission, strengthen its central values, magnify its effectiveness, and instead what you’re doing is investing in an illusion, feeding dollars to the Moloch of austerity. “Just until we get this fully implemented” is a never-happens statement. And the whole thing loops and loops again. You buy the system and it turns out to need another system, and the next system requires once again dividing up one job into a thousand mini-jobs. Or maybe it requires everybody changing their workflow, with all the cognitive burden that entails, even though there’s nothing wrong with the outcomes of their existing work, because the new system doesn’t handle what everybody’s doing now.

Which is another part of the problem. I don’t want more people employed if the cost is living in a more and more convoluted kind of lie, when nobody has the blueprints for the complexity we’ve created, when we can’t even trace or enumerate the work we’re doing any longer. When I compare the range of tasks I have to accomplish on a busy day with what I did in the late 1990s, it staggers me. When I try to talk about it, I either get gaslit (do we have data about that?) or shrugged at, as if I’m complaining about the sunset or the air. Number goes up. It is the way of things.

We keep chasing the will-o’-the-wisp not just because the people in charge have come to believe that the beatings of efficiency need to continue until the morale improves. It’s also because most of us have had those moments where everything did seem faster, better, cleaner because of a new device or tool. And because we are doing many things in objectively better ways. Every time I complain like this, someone points to that kind of change to remind me that we are better off. I just got off a plane yesterday and yes it is better to have tickets and boarding passes on my phone and to book my own tickets than to have to go to a travel agent and receive a ticket that is effectively money in my hand, non-replaceable if I lose it or it is stolen.

But even those things which are better don’t remain that way. We used to have people making our library catalog, working with people elsewhere who made the metadata, buying the cards for the card catalog. Then we suddenly were in an era where if you had a modicum of skill with inputting keywords, you could get much better results, much more quickly, than painstakingly leafing through the card catalog. Then it all enshittified and you get something like this as the related subject links for an article that is about an incredibly specific subject: the Nigerian government banning gambling in 1979.

There’s a few words in there that belong, and the rest are laughably useless to any researcher, no matter what they’re interested in studying. So now I’m back to teaching about research as an arcane and unintuitive process, or more often, I’m just doing the first steps for the students so they can get going on the work that is a productive learning process for them.

All that is solid melts into air. We make gains, we lose them. We have magical, giddy days and weeks where everything seems easy and you can get so much done because you’re out there ahead of the pack, and then everybody catches up and we’re all doing more work for the same pay, the same rewards. Always additive, never subtractive. And again and again, when you ask, “Are we planning to collect data on the net impact?”, it’s as if you asked for something impossible, something never imagined before. A roc’s egg. A unicorn horn. Someday we’ll do that! Thank you for the suggestion! And it’s worse somehow when you feel like that’s a sincere reaction, because you realize this particular Moloch is on self-feeding mode, and even the decision-makers are holding shovels alongside the rest of us, ready to feed its inexhaustible maw.

Great book! One of the business classics, along with The Halo Effect and The Goal. For me it made me realize that when I adopt all these productivity techniques for writing, it's really just a method of entertaining myself. Balzac and Zola didn't need Scrivener to write their books--you don't actually gain anything of value in terms of output from these techniques. They're just a method of procrastination. But....procrastinating is fun, so I do it anyway. I just don't expect it to matter.

It's astonishing how little people actually study productivity. And then, when people do study it, how little those insights actually matter. Like you can do research from now until next Tuesday saying people can do just as much work in a four hour week, but that doesn't mean companies will institute four hour weeks. They just won't do it. These are cost savings and efficiency gains that we simply have no incentive, under our system, to capture.

*Sits down and writes for 2-3 hours every early morning, by hand in a paper-filled notebook. Sits at laptop and revises what was written (being able to type with 10 fingers) for 30-45 minutes every afternoon. Her spelling is better on the laptop but ignores attempts to change her grammar because she has no desire to sound like the grammar check thinks she should. Works for her.

Tim, those of us who span the eras can see the benefits from older regimes. I don’t even know if this could work for the middle aged, much less the youth. Don’t you have to know how to write by hand before you go all in on that? Typing this on my iPad with my index finger, now that I think of it. Don’t even ask what my texting looks like. Not giving up my paper and pen/pencil, though. I don’t need a charging station for those.